‘Move fast and break things’ is still underrated advice. The idea of shipping quickly might be memetically abundant within tech, but there are many places in the economy where it is not just uncommon, but actively discouraged. There are many occasions where companies follow this advice, and win because of it.

But it is easy to let it infect the wrong part of your brain, and a fortiori your processes. It’s easy to let it constrain you in important, invisible ways. The idea that you should ship quickly forces simplicity on everything upstream of the product. You need to do the minimum required to get to a shipped product. Nothing more.

This is considered canonical advice. But it has some interesting effects:

You might strip out features that could be valuable, but aren’t an immediately obvious good – you might go against your hunches / taste in favour of what can be justified by data

You might not add instrumentation, feature flags, and other scaffolding that is hugely valuable but becomes difficult to implement in a large, existing codebase

You might not refactor your code properly when it gets messy, shoving it further and further down the timeline

None of these things are bad per se. But the idea that we should produce the absolute minimal thing at every stage – whatever is needed to push the company’s commercial goals forward – strongly disincentivises a bunch of stuff that takes a product from ‘good enough’ to ‘great’.

And truly great product teams seem to me to have a vision of excellence, and to integrate considerations of the longer term into the demands of the everyday.

Most perniciously, however, the scrappy prototyping approach might produce something that is actually a bit shit?

There’s another approach: doing the deliberately difficult thing because it creates a technical and product advantage, and most importantly because it produces an outcome you’re actually proud of.

In this post, I’d like to give some examples of great companies that have done this sort of thing. I’ll call them ambitious technologists, because they have a sense of ambition – not just for the company they’re building, but for the technology that powers it.

280 North

Early in my time doing web dev, a team of Apple alums launched 280 Slides, a browser-based clone of OS X’s Keynote. 280 Slides was gorgeous; it was fast, functional, beautiful. If you were running it on a Mac, it was easy to forget you were using a web-based application at all:

It’s easy to forget how big a leap this actually was. Robust, fast, and beautiful web UI that felt and behaved like a native application just wasn’t happening in 2008.

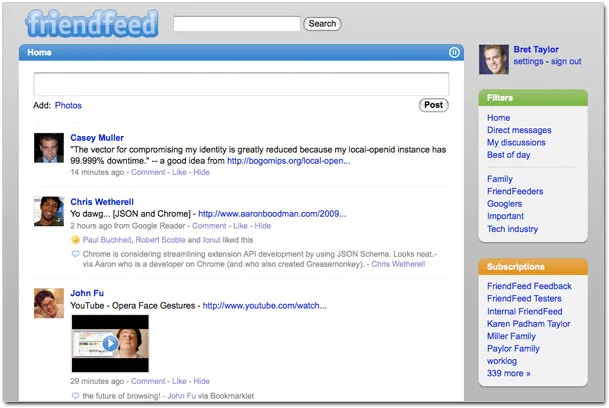

By comparison, here is the roughly-contemporaneous Friendfeed, a social media aggregator that was later acquired by Facebook:

It’s hardly hideous, and it was obviously enough to build a business and threaten the relevant room’s 800-pound gorilla into an acquisition. But this was considered decent design and product building, and it still would be: remove the bevels, flatten the colours, rewrite it using Tailwind… and it wouldn’t look out of place at a YC demo day in 2023.

How did these two teams make products of such an obvious disparity in beauty and complexity?

Friendfeed, like most other startups, built a rough-and-ready version of their system using common technologies and design patterns.

280 North wrote a new programming language. And rewrote OS X’s Cocoa library in it. So they could build the web application they wanted to see, rather than the one dragged out of them through rounds of small iterations.

They designed a language called Objective-J, implementing Smalltalk-style messaging on top of a JavaScript runtime. They then implemented a UI library called Cappuccino in Objective-J, creating a suite of tools that could produce what was then an unheard-of quality of web application.
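To make "Smalltalk-style messaging" a little more concrete, here is a minimal sketch of the general idea in Python: a method call becomes a message (a selector plus arguments) that is resolved against the receiver at the moment it is sent. This is an illustration only, not Objective-J's runtime. The names `msg_send`, `forward`, `Slide` and `LoggingProxy` are hypothetical, and the choice to treat a message to `None` as a silent no-op is an assumption borrowed from Objective-C's nil-messaging behaviour.

```python
def msg_send(receiver, selector, *args):
    """Send `selector` to `receiver`, resolving the method at call time."""
    if receiver is None:
        # Assumption: messaging "nil" is a silent no-op, as in Objective-C.
        return None
    method = getattr(receiver, selector, None)
    if callable(method):
        return method(*args)
    # No matching method: give the receiver a chance to handle the message.
    fallback = getattr(receiver, "forward", None)
    if callable(fallback):
        return fallback(selector, *args)
    raise AttributeError(
        f"{type(receiver).__name__} does not respond to {selector!r}"
    )


class Slide:
    def __init__(self, title):
        self.title = title

    def rename(self, title):
        self.title = title
        return self


class LoggingProxy:
    """Forwards every message to a target and records the selectors it saw."""

    def __init__(self, target):
        self.target = target
        self.log = []

    def forward(self, selector, *args):
        self.log.append(selector)
        return msg_send(self.target, selector, *args)


slide = Slide("Intro")
proxy = LoggingProxy(slide)
msg_send(proxy, "rename", "Welcome")  # proxy has no rename(), so it forwards
```

Because dispatch happens at send time, the proxy can stand in for any object without knowing its interface in advance. That flexibility is what this style of messaging adds on top of an ordinary method call.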

What motivated them to do this?

Incompatibilities between the JavaScript implementations of different browsers and browser versions

The failure to standardise ECMAScript 4, and the lack of quick innovation in client-side languages

The lack of proper inheritance, code importing, dynamic dispatch, and other issues that affected JavaScript the language – and the frustration that libraries needed to redesign and reimplement these things

The difficulty of writing actually richly interactive applications, applications that are meant to be competitive with desktop applications

Cappuccino gave us a web application framework, with a full set of standardised and native-like UI controls. They copied the Cocoa APIs precisely because that would produce the best quality products:

Making APIs is hard, so we just said let’s just steal the best API that exists… and of course we were Mac programmers and we feel very strongly that the Cocoa API is a really really good one; I think it’s hard to deny that the stuff on Macs looks pretty darn good, and that’s not despite the API it’s because of it

– Francisco Tolmasky at JSConf 2009

What’s important about this example is that it expressed a strong vision for what the web could (and would!) become. They rejected contemporaneous approaches, such as Flash, because these approaches didn’t meet the quality bar they thought necessary for web applications to be legitimate competitors. They wanted web-based technologies that could support desktop-style functionality: drag-and-drop, proper graphics rendering, undo/redo. They thought the web as a platform could support this, and that if web applications were to compete with the desktop, they needed to.

All of this seems obvious now, but back then it really wasn’t. It was a bold, ambitious view of what web apps could become. And it took a bold, ambitious technological strategy to get there.

Figma

The early history of Figma is another fascinating example of ambitious technologists doing something that wasn’t done, or even particularly sensible, on the move-fast-and-break-things model.

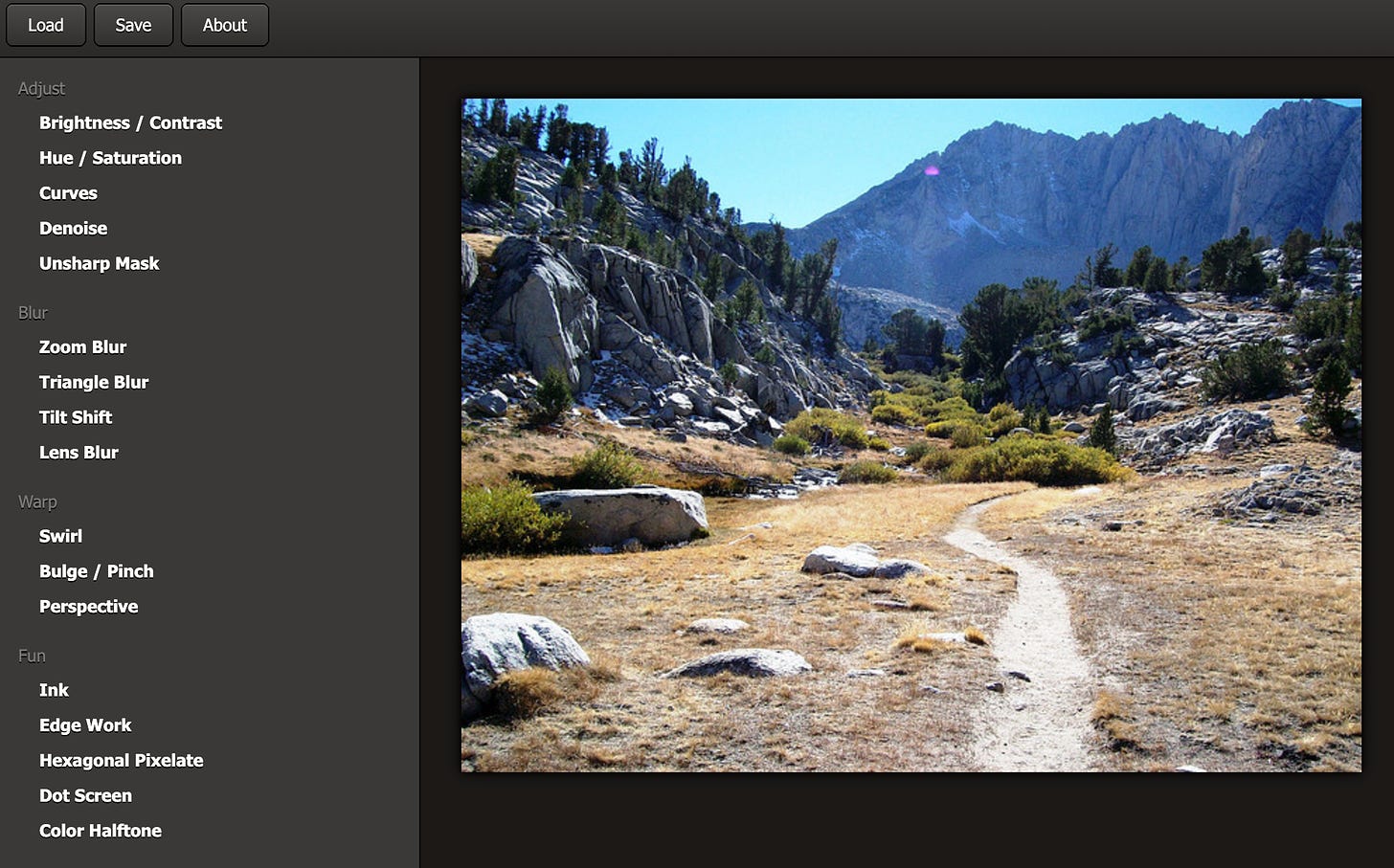

Inspired by vector- and canvas-based tooling like Sketch, Figma quickly developed a new mode of interaction for design tooling. An important part of that story was deciding to build a full graphics editing tool directly in the browser.

To do this, Figma had to implement a large amount of the underlying graphics rendering technology using WebGL, and create a programming language to help optimise it all.

When Figma started adding multiplayer support – a key part of their broader vision to speed up the feedback loop between design and development – they took the ambitious approach again, reaching back into academic research to implement an entirely realtime change management system.
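I won't try to reconstruct Figma's system here. But to give a flavour of the kind of idea that research literature contains, here is a minimal sketch of a last-writer-wins map, one of the simplest conflict-free replicated data types. Everything in it is an assumption for illustration: the class name `LWWMap`, the `(timestamp, client_id)` stamp, and the tie-breaking rule are mine, not a description of what Figma built.

```python
class LWWMap:
    """Per-property last-writer-wins map.

    Each key keeps the value carrying the highest (timestamp, client_id)
    stamp, so replicas converge regardless of the order merges happen in.
    """

    def __init__(self):
        self.values = {}  # key -> value
        self.stamps = {}  # key -> (timestamp, client_id)

    def set(self, key, value, timestamp, client_id):
        stamp = (timestamp, client_id)
        # Only accept the write if it is newer than what we already hold.
        if key not in self.stamps or stamp > self.stamps[key]:
            self.values[key] = value
            self.stamps[key] = stamp

    def merge(self, other):
        """Fold another replica's state into this one."""
        for key, stamp in other.stamps.items():
            self.set(key, other.values[key], *stamp)


# Two clients edit the same shape while disconnected from each other.
alice = LWWMap()
bob = LWWMap()
alice.set("fill", "red", timestamp=1, client_id="alice")
bob.set("fill", "blue", timestamp=2, client_id="bob")
bob.set("width", 100, timestamp=1, client_id="bob")

# Syncing in either direction leaves both replicas in the same state.
alice.merge(bob)
bob.merge(alice)
```

The appeal of this family of techniques is that no replica needs to see edits in the same order as any other: as long as every write eventually arrives, everyone ends up looking at the same document.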

These steps can be justified ex post by thinking about the main product goal of the company: to shorten significantly the feedback loop between web design and engineering. If the tooling is designed with web-native technologies, it can copy and export code directly to those technologies. If the tooling is designed with web-native technologies, it can use the inherently connected nature of the web to make collaboration and sharing easier.

But justifying it ex ante is a tough sell, unless you buy into their vision and their ability to solve tough technical problems continuously over a long period of time.

Indeed, it took them a while to get there, but they did it – and the significance of these technical advances is obvious.

The Browser Company

A more recent example of seriously ambitious technologists is The Browser Company. They build Arc, an attempt to reimagine the web browser. Their attempts so far seem to be successful: I was so impressed within the first 20 minutes of using Arc, I made it my default. Why? Because it’s so obvious they care about the product.

Perhaps the best example of them caring about the product quality comes somewhat obliquely through their recent announcement that they are writing a Swift compiler for Windows.

Browsers are complex, with acute performance needs. Browsers do a tremendous amount of processing for every web page, at every layer of the stack: networking, UI rendering, CSS layout, JS runtime, UI rerendering in response to user input, page rerendering in response to user input. You can’t build a browser with web-based application runtimes like Electron; it’s just not fast enough. Low-level performance concerns also exclude C# (the underlying Common Language Runtime is JIT-compiled).

Security is also an unusually important requirement for a browser, especially as more of the world’s information processing gets moved to the web. The underlying architecture of the browser, and the security posture of the technologies that power it, are crucial. Traditional portable languages like C++ make it harder to write secure code.

Beyond performance and security, The Browser Company clearly care about introducing beauty and whimsy to their applications: a native application with UI that feels aligned with macOS is a huge part of the appeal. Swift is a language designed for creating beautiful UIs. Porting this code to Windows and rebuilding the experience in Windows-native technologies is a non-trivial problem.

So they decided to run Swift on Windows.

Which, when you think about it, is a little bit crazy.

There isn’t a full Swift environment available on Windows (although there have been some attempts). In particular, the existing Swift on Windows compiler lacked modern Swift language features, proper IDE / language server integration, instrumentation and compiler tools, as well as application-level frameworks for rendering UI and animations and all the other things that make Arc a delight to use.

They decided to tackle this problem directly, hiring a compiler team and porting the underlying language tooling to Windows. By doing the ambitious thing, they are taking a short-term hit in terms of technological risk and development time, and will give back to the community a suite of tooling to write cross-platform Swift application code.

There’s another rationale for this audacious approach: the best way to tank your product quality is to make your engineers’ lives miserable. The Browser Company have deep expertise with the Swift language and runtime, and a lot of existing code. Maintaining two code bases is a pretty significant challenge, and it’s important not to discount the benefits of code sharing – especially when you want to iterate quickly. They could give their engineering team a language and platform that they love, and enable the long-term persistence of institutional knowledge.

So far, their attempts seem successful. It doesn’t look great just yet:

But it’s a native Windows application, with native Windows UI, and I’m sure that this upfront work will produce a much better product than the alternatives.

Superhuman and Zed

Two other examples that merit attention are Superhuman, the power-user email client, and Zed, a high-performance code editor.

Superhuman is designed for speed. But did you know they also wrote an entire CSS layout framework in order to get the typography right? From this interview with Rahul Vohra on AcquiredFM:

The other example, David, that you may have been referring to is actually to do a typography and fonts. I'm a big typography nerd. I’ll avoid using too much jargon as I go into this example, but I really wanted everything to line up with the vertical rhythm on the page. This is really hard to do if you're just doing basic web programming. It is in fact impossible—if you have different fonts, you have graphical elements, things of different sizes—to have everything lined up on an eight pixel or a six pixel grid. But we figured out how to do it.

We dove into the Chrome source code, reverse-engineered the font layout engine, and then built our own layout framework actually entirely in CSS because we wanted this thing to be super fast as well.

Now, whenever we want to lay something out, we have a little tool but in just the font, it spits out all the metrics. This is the height of the ascender, this is the x-height, this is the cap height, this is the length of the descender.

…

It generates the CSS to automatically lay this stuff out that it looks beautiful. A lot of the reason why the superhuman.com website and the Superhuman app looks the way it does is, absolutely everything is on a sub-pixel grid to perfection because of the CSS framework.

On a move-fast-and-break-things model, it makes zero sense to rewrite a CSS layout framework in order to achieve marginal improvements in typography rendering and increases in speed. But two out of three of the Superhuman team’s values are “Create Delight” and “Remarkable Quality”, and this is a phenomenal example of their work living up to those values. Better typography and faster rendering improve the email experience in a subtle but significant way. They did the right thing, not the easy thing, because they’d make a better product by doing so.
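To give a sense of what "font metrics in, CSS out" could look like, here is a rough sketch in Python. It is not Superhuman's tool, which isn't public as far as I know. The names `FontMetrics`, `baseline_offset`, `padding_to_grid` and `css_rule` are hypothetical, the metric values are made up, and the baseline model (half-leading plus ascent) is a simplification of what browsers actually do.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class FontMetrics:
    units_per_em: int
    ascender: int   # font units above the baseline
    descender: int  # font units below the baseline, as a positive number


def baseline_offset(metrics, font_size, line_height):
    """Distance in px from the top of the line box down to the baseline."""
    scale = font_size / metrics.units_per_em
    ascent_px = metrics.ascender * scale
    descent_px = metrics.descender * scale
    # Extra line-height is split evenly above and below the glyph area.
    half_leading = (line_height - (ascent_px + descent_px)) / 2
    return half_leading + ascent_px


def padding_to_grid(metrics, font_size, line_height, grid):
    """Smallest top padding that pushes the baseline onto a grid line."""
    return (-baseline_offset(metrics, font_size, line_height)) % grid


def css_rule(selector, metrics, font_size, line_height, grid):
    pad = padding_to_grid(metrics, font_size, line_height, grid)
    return (
        f"{selector} {{ font-size: {font_size}px; "
        f"line-height: {line_height}px; padding-top: {pad:g}px; }}"
    )


# Made-up metrics for an imaginary heading font.
heading_font = FontMetrics(units_per_em=1000, ascender=750, descender=250)
rule = css_rule("h2", heading_font, font_size=20, line_height=28, grid=8)
```

A real system would also have to compensate for the height that the padding adds, and cope with fonts whose reported metrics differ between platforms. That is presumably where the reverse-engineering of Chrome's layout engine comes in.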

Zed have also put an emphasis on speed and responsiveness at the heart of what they do. This perhaps makes commercial sense for a text editor, because speed is an important dimension of competition. But it would have been a lot more common to accept the limitations of the most common underlying platform – something like Electron. Instead, the Zed team wrote their own GPU-powered graphics library.

It takes a certain kind of hubris, and commitment to quality, to build out a library of this sort. Especially since existing platforms work fine. People build editors with them! Successful editors, no less. But the Zed team were determined to do better. So they did the ambitious thing.

Is being an ambitious technologist actually a good idea?

Probably not!

The canonical advice is often good advice. Taking on large technical challenges when you are proving the commercial viability of a product is a big risk.

But there are many cases where this advice is not good: where it encourages incremental thinking; where it cauterises progress. If we are not sufficiently bold, we will revert to the mean. And the mean isn’t where you want to be.

Your core competencies may very well be wider than you think. Taking a reductionist view of what your company should do might make sense on a priori grounds, even if it goes against your instincts and taste.

But that’s the problem with taste, hunches, intuition: it’s often really difficult to explain why you are making the calls that you do. Even if those calls are the right ones long-term.

The sad fact is that many important technological advancements are built by teams that end up losing commercially. But that isn’t always the case.

And we can honour these teams’ memories by giving ourselves a higher bar to meet. By making something we’re proud of, not just something that gets to market quickly, with the minimum amount of work.

Thanks to Arnaud Schenk and Chris Downer for discussions that led to this post, and Maurice Banerjee-Palmer for helpful comments.
